Whitepaper

The Next Mile In Cloud Waste

In an article on DevOps.com, Jay Chapel cites that over 90% of organizations will use public cloud services in 2022. In fact, public cloud customers will spend more than $50 billion on Infrastructure as a Service (IaaS) from providers such as AWS, Azure and Google. This boom is due in part to the broader adoption of public cloud services, as many shops strive to simplify the operations management of their compute resources. It is also driven by the expansion of infrastructure within existing accounts, as organic growth keeps pace with the growth of the business. Not surprisingly, the growth in cloud spending often exceeds the growth in the business itself. The sad truth is that a huge portion of what companies spend on the cloud is simply waste.

Migration To Cloud Continues

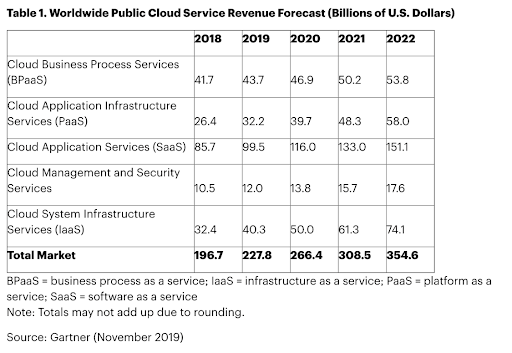

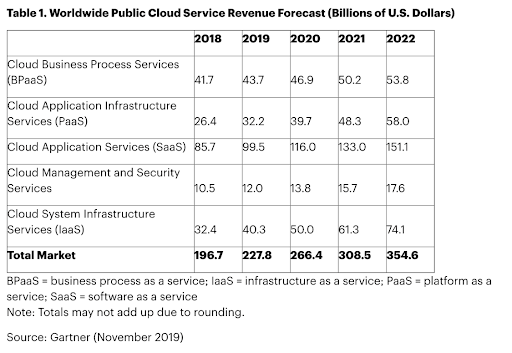

Gartner recently predicted that cloud services spending will grow 17% in 2020 and reach $266.4 billion. While Software as a Service (SaaS) makes up the largest market segment at $116 billion, the fastest growing portion of cloud spend will continue to be IaaS, which is expected to increase 24% year-over-year and surpass $50 billion in 2020.

What we typically observe is that about two-thirds of the average enterprise public cloud bill is spent on compute, which means about $33.3 billion this year will be spent on compute resources. Unfortunately, this portion of a cloud bill is particularly vulnerable to wasted spend, but it’s not the only area where waste abounds.

Cloud Expertise Matures - At What Pace?

As cloud computing continues to grow and cloud users mature, along with their skills in managing and optimizing their cloud consumption, we might hope that this $50 billion is being put to optimal use. While we do find that cloud customers are more aware of the potential for wasted spending than they were just a few years ago, that awareness does not seem to translate into infrastructure that is cost-optimized from the beginning. The fact is that stemming this waste is simply not a priority in many shops, partly because they are not aware of its scope.

An example of the scope of waste, cited by Jay Chapel on DevOps.com: “one healthcare IT provider was found to be wasting up to $5.24 million annually on their cloud spend, an average of more than $1,000 per resource per year.”

Idle Resources: These are VMs and instances that are paid for by the hour, minute or second but are not actually used 24/7. Typically, they are non-production resources used for development, staging, testing and QA. Based on data collected from our users, about 44% of their compute spend is on non-production resources. Most non-production resources are only used during a 40-hour work week and do not need to run 24/7. That means that for the other 128 of the 168 hours in a week (76%), the resources sit idle but are still paid for. So, we find the following wasted spend from idle resources: $33.3 billion in compute spend, times 0.44 non-production, times 0.76 of the week idle, equals about $11 billion wasted on idle cloud resources in 2020.

Overprovisioned Resources: Another source of wasted cloud spend is overprovisioned infrastructure, that is, paying for resources that are larger in capacity than needed. That means you’re paying for resource capacity you’re rarely (or never) using. About 40% of instances are sized at least one size larger than needed for their workloads. Reducing an instance by just one size cuts its cost by 50%, and downsizing by two sizes saves 75%. The data we see in client infrastructure confirms this, and the problem may well be even larger: the infrastructure we see has an average CPU utilization of 4.9%. Of course, this could be skewed by the fact that the resources we deal with are more commonly non-production resources. However, it still paints a picture of gross underutilization, ripe for rightsizing and optimization. Taking a conservative estimate of 40% of resources oversized by just one size, we see the following: about $33 billion in compute spend, times 0.4 oversized, times 0.5 overspend per oversized resource, equals $6.6 billion wasted on oversized resources in 2020.
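The two estimates above can be reproduced with a few lines of arithmetic. The sketch below is only an illustration of that calculation: the function names are hypothetical, and the rule that each size step halves the price is taken from the 50% and 75% figures quoted above rather than from any provider's price list.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours


def idle_waste(compute_spend, non_prod_share, used_hours_per_week=40):
    """Spend on non-production resources during the hours they sit idle."""
    idle_fraction = (HOURS_PER_WEEK - used_hours_per_week) / HOURS_PER_WEEK
    return compute_spend * non_prod_share * idle_fraction


def oversize_waste(compute_spend, oversized_share, overspend_fraction):
    """Spend on capacity that oversized resources never use."""
    return compute_spend * oversized_share * overspend_fraction


def savings_from_downsizing(steps):
    """Fraction saved by moving down `steps` sizes.

    Assumption: each size step halves the price, as in the text above.
    """
    return 1 - 0.5 ** steps


# Inputs are in billions of dollars, taken from the figures in the text.
print(f"Idle waste:     ${idle_waste(33.3, 0.44):.1f}B")
print(f"Oversize waste: ${oversize_waste(33.0, 0.4, 0.5):.1f}B")
```

Because the sketch uses the exact idle fraction (128/168) instead of the rounded 0.76, its idle figure differs from the text's by a few hundredths of a billion; both round to roughly $11 billion.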

Cloud Waste - Concern For All Stakeholders

Cloud adoption has exploded over the past decade or so, and for good reason. Many digital transformation advancements – and even the complete reimagination of entire industries – can be directly mapped and attributed to cloud innovation. While this rapid pace of innovation has had a profound impact on businesses and how we connect with our customers and end users, the journey to the cloud isn’t all sunshine and rainbows.

Along with increased efficiency, accelerated innovation, and added flexibility comes an exponential increase in complexity, which can make managing and securing cloud-based applications and workloads a daunting challenge. This added complexity can make it difficult to maintain visibility into what’s running across your cloud(s).

Many stakeholders should be concerned about cloud waste. FinOps and Finance departments are generally the most inclined to get these costs under control, while cloud engineers are often the ones tasked with managing the process.

User-defined cost allocation tagging can help teams map cloud costs directly to an application or other cloud resource according to particular features, products, teams or individuals. This way, those responsible for creating a particular resource are also accountable for rightsizing it when it is overprovisioned or turning it off when it is unused.
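As a rough illustration of how tag-based allocation works, the sketch below rolls resource costs up by a tag. The record layout (`tags`, `monthly_cost`), the `team` tag key, and the resource IDs are all hypothetical; a real billing export will have its own schema. Untagged resources get their own bucket so that spend with no owner stays visible.

```python
from collections import defaultdict


def allocate_costs(resources, tag_key, untagged_label="(untagged)"):
    """Sum monthly cost per value of `tag_key`.

    `resources` is a list of dicts with hypothetical keys
    "tags" (dict of tag name -> value) and "monthly_cost" (float).
    """
    totals = defaultdict(float)
    for resource in resources:
        owner = resource.get("tags", {}).get(tag_key, untagged_label)
        totals[owner] += resource["monthly_cost"]
    return dict(totals)


# Made-up sample data for illustration only.
sample = [
    {"id": "vm-1", "tags": {"team": "checkout"}, "monthly_cost": 100.0},
    {"id": "vm-2", "tags": {"team": "checkout"}, "monthly_cost": 50.0},
    {"id": "vm-3", "tags": {"team": "search"}, "monthly_cost": 75.0},
    {"id": "vm-4", "tags": {}, "monthly_cost": 20.0},
]
print(allocate_costs(sample, "team"))
```

A large untagged bucket is itself a useful signal, since it points to spend that nobody is accountable for.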

Getting visibility into usage is another key component for managing waste. Most cloud providers have their own tools that give a thorough analysis of resources running in a particular environment as well as actual usage. By taking advantage of these tools, teams can understand what adjustments they need to make to their resources.

Beyond management challenges, organizations often run into massive increases in IT costs as they scale. Whether from neglecting to shut down old resources when they are no longer needed or from over-provisioning them at the outset to avoid auto-scaling issues, cloud waste and overspend are among the most prevalent challenges that organizations face when adopting and accelerating cloud consumption.

Just how prevalent is this issue? According to a recent survey of cloud enterprises in 2022, nearly 60% of cloud decision-makers say optimizing their cloud usage to cut costs is a top priority for this year.

The cost benefits of reducing waste can be massive, but knowing where to look and what the most common culprits of waste are can be a challenge, particularly if your organization is relatively new to the cloud.

The Opaque Leaks

Cloud waste is what happens when cloud services remain unused or underused. Cloud waste is becoming an important concern for companies that lease software-as-a-service (SaaS), platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) cloud services from public cloud providers.

Cloud waste occurs when employees overestimate what services will be required to support a specific business goal. In a large corporation, when multiple business divisions use their own budgets to purchase cloud services, it’s easy for cloud waste to go unnoticed. The IT research firm Gartner estimates that in 2021, cloud waste cost businesses approximately $26.6 billion.

Often, companies underestimate how much wasted cloud spend they incur. The problem is that many organizations have little to no idea how much they are spending on the cloud. The last thing you want is to lose up to 35% of your cloud budget because you can’t see it leaking thousands of dollars every week. To find those leaks, you need to perform a cloud cost analysis.

If you are an Amazon Web Services (AWS) customer, you can use several free AWS native cost tools to determine how many cloud resources you consume. AWS Cost Explorer, AWS CloudTrail, and the AWS Cost and Usage Report can give you a high-level view of your overall spending.
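For teams that prefer to pull this high-level view programmatically, Cost Explorer also exposes an API. The sketch below is a minimal example under a few assumptions: the helper name `monthly_cost_by_service` is hypothetical, the client is expected to be one created with `boto3.client("ce")`, and pagination (`NextPageToken`) is not handled. Cost Explorer API requests may be billed separately from console use, so check current pricing before scheduling this.

```python
def monthly_cost_by_service(ce_client, start, end):
    """Return {service name: unblended cost} for the period [start, end).

    `ce_client` is assumed to be a boto3 Cost Explorer client;
    `start` and `end` are "YYYY-MM-DD" strings.
    """
    response = ce_client.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
    totals = {}
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            service = group["Keys"][0]
            # Amounts come back as strings in the API response.
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    return totals
```

With credentials configured, a call might look like `monthly_cost_by_service(boto3.client("ce"), "2022-01-01", "2022-02-01")`.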

However, these basic tools may not enable you to see granular cost insights, such as how much a software testing project costs over a specific period. To slice and dice an AWS bill into unit cost insights, such as cost per customer, cost per team, and cost per deployment project, you need a cloud cost intelligence approach that will link cloud costs to key business activities.
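To make the idea of unit cost concrete, here is a small sketch of the calculation. It assumes billing line items that have already been tagged with a business dimension such as `project`; the field names (`day`, `project`, `cost`) and both function names are hypothetical and stand in for whatever a given cost intelligence setup produces.

```python
from datetime import date


def cost_in_period(line_items, dimension, value, start, end):
    """Total cost of items where `dimension` equals `value`
    and the item's day falls within [start, end] inclusive."""
    return sum(
        item["cost"]
        for item in line_items
        if item.get(dimension) == value and start <= item["day"] <= end
    )


def cost_per_unit(total_cost, unit_count):
    """Unit cost, for example cost per customer or per deployment."""
    if unit_count <= 0:
        raise ValueError("unit_count must be positive")
    return total_cost / unit_count


# Made-up line items for illustration only.
items = [
    {"day": date(2022, 3, 1), "project": "load-testing", "cost": 40.0},
    {"day": date(2022, 3, 2), "project": "load-testing", "cost": 60.0},
    {"day": date(2022, 3, 2), "project": "reporting", "cost": 25.0},
    {"day": date(2022, 4, 1), "project": "load-testing", "cost": 10.0},
]
march_testing = cost_in_period(
    items, "project", "load-testing", date(2022, 3, 1), date(2022, 3, 31)
)
print(march_testing, cost_per_unit(march_testing, 4))
```

The same two steps, filter by a business dimension and divide by a business count, apply whether the unit is a customer, a team, or a deployment project.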

Using such insights, you can more easily identify which cost centers you can limit or reduce to decrease cloud costs without deteriorating cloud performance or stifling innovation.

Typically, within the first two years of onboarding an operation to the cloud, sophisticated shops will have achieved the cost management described above. That is a milestone worthy of celebration, as it can already save shops 15-25% of their initial cloud cost. Even with such achievements, there is another level of optimization worth pursuing: application services that embed cloud compute. Snowflake is a great example, as it is one of the more prevalent cloud applications that many shops rely on for BI and analytics.

Snowflake is a very powerful data engine that can power complex analytics and simplify scalability and performance challenges. That said, it is an opaque container for the cloud spend inside the application. Snowflake is inherently a resource manager in itself, but its utilization and efficiency monitoring pales in comparison with what the AWS platform offers. This makes efforts to trim waste much more challenging. For companies that are data and analytics driven, Snowflake spend can rival cloud compute spend. In these cases, an investment needs to be made to mitigate the waste that inevitably sits within the Snowflake cloud spend.

Our Caerus product is designed specifically to unravel this next compute spend and optimization problem, the one that Snowflake presents. Caerus has algorithms that project the optimal resource utilization for each Snowflake request and dynamically manage Snowflake workloads to minimize resource wastage. The framework that Caerus uses for this analysis is powerful enough to also take on many useful but tedious calculation, formatting and data fabrication tasks, which makes Snowflake output that much more self-serve ready for information workers to consume without further assistance from IT or data engineers.